DBRX, an Open-Source LLM by Databricks, Beats GPT-3.5

Databricks, the company behind DBRX, says it is the world’s most powerful open-source AI model. Let’s look at how it was built.

Highlights:

- Databricks recently launched DBRX, an open general-purpose LLM that it claims is the world’s most powerful open-source AI model.

- It outperforms OpenAI’s GPT-3.5 as well as existing open-source LLMs like Llama 2 70B and Mixtral-8x7B on standard industry benchmarks.

- It is freely available for research and commercial use through GitHub and HuggingFace.

Meet DBRX, the New LLM on the Market

DBRX is an open, general-purpose LLM built by Databricks to encourage customers to migrate away from commercial alternatives.

The team at Databricks spent roughly $10 million and two months training the new AI model.

DBRX is a transformer-based decoder-only LLM trained using next-token prediction. It uses a fine-grained mixture-of-experts (MoE) architecture with 132B total parameters, of which 36B are active on any input. It was pre-trained on 12T tokens of text and code data.
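To put the parameter counts in perspective, the short calculation below works out what share of the model is active for a given input. It uses only the 132B and 36B figures quoted above; the variable names are illustrative.

```python
# Parameter counts as quoted in the article.
total_params = 132e9   # all experts plus shared layers
active_params = 36e9   # parameters actually used for any one input

# Share of the model that participates in a single forward pass.
active_fraction = active_params / total_params
print(f"Active share per input: {active_fraction:.1%}")  # 27.3%
```

In other words, roughly a quarter of the weights do the work for any one input, which is the usual appeal of an MoE design: large total capacity at a lower per-token compute cost.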

Ali Ghodsi, co-founder and CEO of Databricks, spoke about how their vision translated into DBRX:

“At Databricks, our vision has always been to democratize data and AI. We’re doing that by delivering data intelligence to every enterprise — helping them understand and use their private data to build their own AI systems. DBRX is the result of that aim.”

Ali Ghodsi

DBRX uses the MoE architecture, a type of neural network that divides the learning process among multiple specialized subnetworks known as “experts.” Each expert is proficient in a specific aspect of the designated task. A “gating network” decides how to allocate the input data among the experts optimally.

Compared with other open MoE models like Mixtral and Grok-1, DBRX is fine-grained, meaning it uses a larger number of smaller experts. It has 16 experts and chooses 4, while Mixtral and Grok-1 have 8 experts and choose 2. This provides 65x more possible combinations of experts, which Databricks says helps improve model quality.

It was trained on a cluster of 3072 NVIDIA H100s interconnected via 3.2Tbps Infiniband. The development of DBRX, spanning pre-training, post-training, evaluation, red-teaming, and refinement, took place over three months.
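The routing idea can be illustrated with a toy example. The sketch below is not DBRX's actual router: it assumes a common top-k scheme (softmax over router scores, keep the k best experts, renormalize their weights), and the function names `top_k_gate` and `moe_forward`, the toy experts, and the scores are all hypothetical.

```python
import math

def top_k_gate(router_logits, k):
    """Return {expert_index: weight} for the k highest-scoring experts."""
    # Softmax over all experts (subtract the max for numerical stability).
    m = max(router_logits)
    exps = [math.exp(x - m) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the k most probable experts.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected weights sum to 1.
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_forward(x, experts, router_logits, k):
    """Combine the outputs of the selected experts, weighted by the gate."""
    weights = top_k_gate(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Toy setup: 16 experts, each just scales its input by a different factor.
experts = [lambda x, s=i + 1: s * x for i in range(16)]
# Hypothetical router scores for one token; a real router computes these
# from the token's hidden state.
logits = [0.0] * 16
logits[3], logits[7], logits[10], logits[12] = 2.0, 1.5, 1.0, 0.5

print(top_k_gate(logits, k=4))          # only experts 3, 7, 10, 12 are used
print(moe_forward(1.0, experts, logits, k=4))
```

Only the selected experts run for a given input; the remaining ones are skipped entirely, which is where the compute savings come from.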
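The 65x figure follows from counting how many distinct sets of experts can be selected for an input. A quick check, using only the expert counts given above:

```python
from math import comb

# Number of ways to choose the active experts for one input.
dbrx_combos = comb(16, 4)     # 4 of 16 experts
mixtral_combos = comb(8, 2)   # 2 of 8 experts (Mixtral, Grok-1)

ratio = dbrx_combos / mixtral_combos
print(dbrx_combos, mixtral_combos, ratio)  # 1820 28 65.0
```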

Why is DBRX open-source?

Recently, Grok by xAI was also made open-source. By open-sourcing DBRX, Databricks is contributing to a growing movement that challenges the secretive approach of major companies in the current generative AI boom.

While OpenAI and Google keep the code for their GPT-4 and Gemini large language models closely guarded, rivals like Meta have released their models to foster innovation among researchers, entrepreneurs, startups, and established businesses.

Databricks aims to be transparent about the creation process of its open-source model, in contrast to Meta’s approach with its Llama 2 model. With open-source models like this becoming available, the pace of AI development is expected to remain brisk.

Databricks has a particular motivation for its openness. While tech giants like Google have swiftly rolled out new AI solutions over the past year, Ghodsi notes that many large companies in various sectors have yet to adopt the technology widely for their data.

The aim is to assist companies in finance, healthcare, and other fields that want ChatGPT-like tools but are hesitant to entrust sensitive data to the cloud.

“We call it data intelligence—the intelligence to understand your own data,” Ghodsi explains. Databricks will either tailor DBRX for a client or develop a customized model from scratch to suit their business needs. For major companies, the investment in creating a platform like DBRX is justified, he asserts. “That’s the big business opportunity for us.”

Comparing DBRX to other models

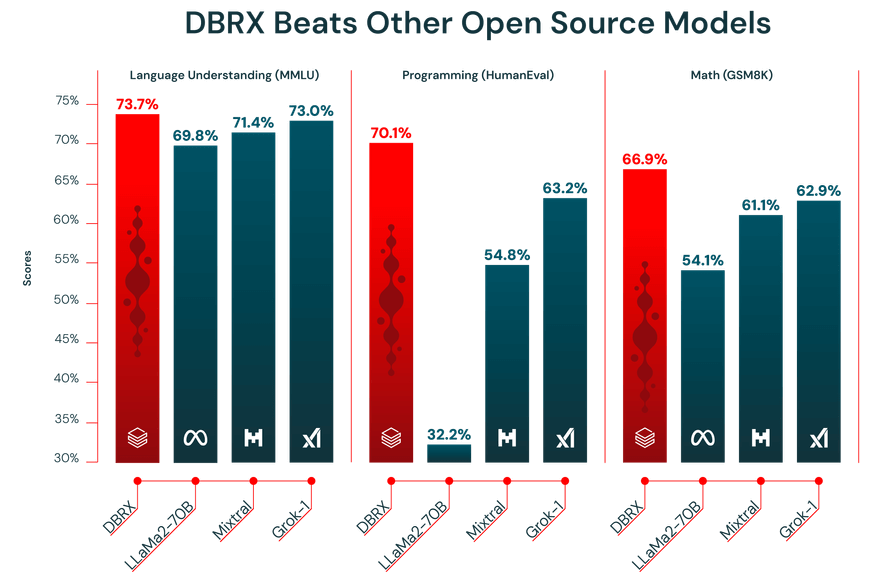

DBRX outperforms existing open-source LLMs like Llama 2 70B and Mixtral-8x7B on standard industry benchmarks, such as language understanding (MMLU), programming (HumanEval), and math (GSM8K). The figure below shows a comparison between Databricks’ LLM and other open-source LLMs.

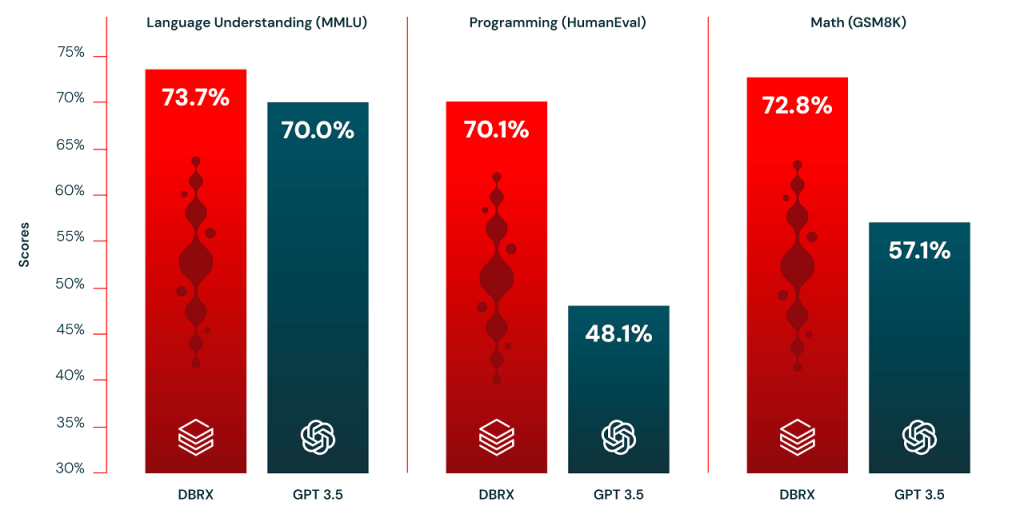

It also outperforms GPT-3.5 on the same benchmarks, as seen in the figure below:

It outperforms its rivals on several key benchmarks:

- Language Understanding: DBRX achieves a score of 73.7%, surpassing GPT-3.5 (70.0%), Llama 2-70B (69.8%), Mixtral (71.4%), and Grok-1 (73.0%).

- Programming: It demonstrates a significant lead with a score of 70.1%, compared with GPT-3.5’s 48.1%, Llama 2-70B’s 32.3%, Mixtral’s 54.8%, and Grok-1’s 63.2%.

- Math: It achieves a score of 66.9%, edging out GPT-3.5 (57.1%), Llama 2-70B (54.1%), Mixtral (61.1%), and Grok-1 (62.9%).

Databricks also claims that for SQL-related tasks, DBRX has surpassed GPT-3.5 Turbo and is challenging GPT-4 Turbo. It is also a leading model among open models and GPT-3.5 Turbo on Retrieval Augmented Generation (RAG) tasks.

Availability of DBRX

DBRX is freely accessible for both research and commercial applications on open-source collaboration platforms like GitHub and HuggingFace.

It can be accessed through GitHub. It can also be accessed through HuggingFace. Users can access and interact with DBRX hosted on HuggingFace at no cost.

Developers can use this new openly available model, released under an open license, to build on top of the work done by Databricks. They can use its long-context abilities in RAG systems and build custom DBRX models on their data directly on the Databricks platform.

The open-source LLM can be accessed on AWS and Google Cloud, as well as directly on Microsoft Azure through Azure Databricks. Additionally, it is expected to be available through the NVIDIA API Catalog and supported on the NVIDIA NIM inference microservice.

Conclusion

Databricks’ introduction of DBRX marks a significant milestone in the world of open-source LLMs, showcasing superior performance across various benchmarks. By making it open-source, Databricks is contributing to a growing movement that challenges the secretive approach of major companies in the current generative AI boom.