What Do Developers Really Think About Claude 3?

Highlights:

- Nearly two weeks into Claude 3’s launch, developers worldwide have explored many of its potential use cases.

- Its capabilities range from building a complete multiplayer app to writing tweets that mimic your style.

- It can even perform search and reasoning tasks over large documents and generate Midjourney prompts. We can expect much more in the days to come.

It’s been almost two weeks since Anthropic launched what it presents as the world’s most powerful AI model family, Claude 3. Developers worldwide have tested it and explored its vast capabilities across many use cases.

Some have been truly amazed by its performance and have put the chatbot on a pedestal, favoring it over ChatGPT and Gemini. In this article, we’ll explore the game-changing capabilities that come with Claude 3, analyze them in depth, and point out how the developer community can benefit from them.

13 Game-Changing Features of Claude 3

1. Developing a Complete Multiplayer App

A user named Murat on X prompted Claude 3 Opus to develop a multiplayer drawing app that lets users collaborate and see strokes appear on other people’s devices in real time. He also instructed Claude to let users pick a name and a color, and to save users to the database when they log in.

Not only did Claude 3 successfully develop the application, but it also produced no bugs in the deployment. The most impressive aspect was that it took Claude 3 only 2 minutes and 48 seconds to deploy the entire application.

Opus did an excellent job of extracting and saving the database, the index file, and the client-side app. Another interesting aspect of this deployment was that Claude kept retrying to get API access while initially building the application. In the video from the user’s tweet, you can see how well the application was developed; strokes from multiple users are reflected on the app interface.

“Make a multiplayer drawing app where the strokes appear on everyone else’s screens in realtime. let user pick a name and color. save users to db on login”

2m48s, no bugs:

– users & drawings persist to sqlite

– socket multiplayer

one-shot video (claude 3 opus), demo at end pic.twitter.com/lI8k9o7Nn4

— murat 🍥 (@mayfer) March 6, 2024

This is arguably the first time an AI chatbot has handled the entire development of a multiplayer application.
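The tweet does not include Claude’s generated code. As a rough illustration of the two pieces it mentions, socket broadcasting and SQLite persistence, here is a minimal, hypothetical sketch of the server-side state in Python. It replaces real sockets with in-memory client inboxes so that it runs standalone. The `DrawingHub` and `Client` names are assumptions, and this is not Claude’s output.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class Client:
    """A connected user; `inbox` stands in for a real socket (hypothetical)."""
    name: str
    color: str
    inbox: list = field(default_factory=list)


class DrawingHub:
    """Hypothetical server state: persists users/strokes and fans strokes out."""

    def __init__(self, db_path: str = ":memory:"):
        self.db = sqlite3.connect(db_path)
        self.db.execute("CREATE TABLE IF NOT EXISTS users (name TEXT PRIMARY KEY, color TEXT)")
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS strokes (name TEXT, x0 REAL, y0 REAL, x1 REAL, y1 REAL)"
        )
        self.clients: dict[str, Client] = {}

    def login(self, name: str, color: str) -> Client:
        # Save (or update) the user on login, as the prompt requested.
        self.db.execute("INSERT OR REPLACE INTO users (name, color) VALUES (?, ?)", (name, color))
        self.db.commit()
        client = Client(name, color)
        self.clients[name] = client
        return client

    def draw(self, name: str, x0: float, y0: float, x1: float, y1: float) -> None:
        # Persist the stroke, then broadcast it to every *other* connected client.
        self.db.execute("INSERT INTO strokes VALUES (?, ?, ?, ?, ?)", (name, x0, y0, x1, y1))
        self.db.commit()
        stroke = {"name": name, "color": self.clients[name].color,
                  "from": (x0, y0), "to": (x1, y1)}
        for other in self.clients.values():
            if other.name != name:
                other.inbox.append(stroke)

    def history(self) -> list:
        # Late joiners can replay every saved stroke from the database.
        return self.db.execute("SELECT name, x0, y0, x1, y1 FROM strokes").fetchall()
```

In a real app, each `inbox.append` would be a WebSocket send. Keeping persistence and broadcast in one method means a stroke is never shown to other users without also being saved.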

2. Decoding IKEA Instructions

A user named Gabriel on X gave quite an interesting prompt. He asked Claude 3 Opus to generate instructions from a series of user manual images. The images were numbered in sequence.

The results from Claude 3 were great. It did an excellent job of explaining the entire manual step by step. Surprisingly, it even specifically mentioned the type of tools to be used, along with their numbers! It thoroughly analyzed all the images, pointed out which images show the extra parts, and also mentioned which image shows the final assembled result.

So now you can have IKEA instructions decoded from your user manual images and follow them step by step, hassle-free. Check out the images below from the user’s tweet.
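Gabriel’s exact prompt isn’t shown. The following is a minimal sketch of how numbered manual pages could be packed, in order, into a single request. It assumes the Anthropic Messages API format for base64 image content blocks. The helper name and prompt wording are hypothetical. The code only builds the `messages` payload and does not call the API.

```python
import base64


def build_manual_request(pages: list[bytes], media_type: str = "image/png") -> list[dict]:
    """Build a Messages-API-style `messages` list from ordered manual page images.

    Each page is preceded by a text label so the model can refer to it by number.
    """
    content: list[dict] = []
    for number, page in enumerate(pages, start=1):
        content.append({"type": "text", "text": f"Image {number}:"})
        content.append({
            "type": "image",
            "source": {
                "type": "base64",
                "media_type": media_type,
                "data": base64.b64encode(page).decode("ascii"),
            },
        })
    # Final instruction comes after all pages.
    content.append({
        "type": "text",
        "text": ("These images are numbered pages of an assembly manual. "
                 "Write step-by-step instructions, naming the tools and parts each step uses."),
    })
    return [{"role": "user", "content": content}]
```

Labelling each image with its number lets the model say, for example, which page shows the spare parts, as Claude did in the tweet.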

3. Writing a Fuzzer for a GIF Decoder

A user named Brendan on X gave Claude 3 Opus the entire source of a small C GIF decoding library that he found on GitHub. He asked Claude to write a Python function to generate random GIFs that would exercise the parser. Here’s the prompt he gave:

“Given this C program, can you write a Python function that generates random gif files that fully exercise the parsing code? The function should have the signature:”

    # Generates a random file into `out`
    def generate_random_input(out: BinaryIO):

Claude generated the Python function, and its GIF generator achieved 92% line coverage in the decoder and found 4 memory safety bugs and one hang. The function writes random GIF files to the provided binary output stream.

It also went on to explain the code step by step, describing how the GIF generator function operates. It explains how it generates a Logical Screen Descriptor, a Global Color Table, and an Extension Introducer or Image Separator to vary the size, colors, and sub-blocks of the GIF, respectively. It also found 5 signed integer overflow issues in the code.

Here’s the Python function that Claude 3 Opus generated:

    import random
    import struct

    def generate_random_input(out):
        # Generate Header
        out.write(b'GIF89a')

        # Generate Logical Screen Descriptor
        width = random.randint(1, 65535)
        height = random.randint(1, 65535)
        out.write(struct.pack('<HH', width, height))
        packed_field = random.randint(0, 255) | 0x80  # Set Global Color Table flag
        out.write(struct.pack('B', packed_field))
        out.write(struct.pack('B', random.randint(0, 255)))  # Background Color Index
        out.write(struct.pack('B', random.randint(0, 255)))  # Pixel Aspect Ratio

        # Generate Global Color Table
        gct_size = 1 << ((packed_field & 0x07) + 1)
        for _ in range(gct_size):
            r, g, b = random.randint(0, 255), random.randint(0, 255), random.randint(0, 255)
            out.write(struct.pack('BBB', r, g, b))

        # Generate Extensions and Image Blocks
        while True:
            block_type = random.choice([0x21, 0x2C, 0x3B])  # Extension Introducer, Image Separator, or Trailer
            out.write(struct.pack('B', block_type))

            if block_type == 0x21:  # Extension Introducer
                extension_label = random.choice([0x01, 0xF9, 0xFE, 0xFF])  # Plain Text, Graphic Control, Comment, or Application
                out.write(struct.pack('B', extension_label))

                if extension_label == 0x01:  # Plain Text Extension
                    out.write(struct.pack('B', 12))  # Block Size
                    out.write(struct.pack('<HHHH', random.randint(0, width), random.randint(0, height),
                                          random.randint(0, width), random.randint(0, height)))
                    out.write(struct.pack('BB', random.randint(0, 255), random.randint(0, 255)))
                    out.write(struct.pack('BB', random.randint(0, 255), random.randint(0, 255)))
                    out.write(struct.pack('B', 0))  # Block Terminator

                elif extension_label == 0xF9:  # Graphic Control Extension
                    out.write(struct.pack('B', 4))  # Block Size
                    packed_gce_field = random.randint(0, 255)
                    out.write(struct.pack('B', packed_gce_field))
                    out.write(struct.pack('<H', random.randint(0, 65535)))  # Delay Time
                    out.write(struct.pack('B', random.randint(0, 255)))  # Transparent Color Index
                    out.write(struct.pack('B', 0))  # Block Terminator

                elif extension_label == 0xFE:  # Comment Extension
                    # Writes a sub-block length with no payload bytes (a malformed block)
                    out.write(struct.pack('B', random.randint(1, 255)))
                    out.write(b'\x00')  # Block Terminator

                elif extension_label == 0xFF:  # Application Extension
                    out.write(struct.pack('B', 11))  # Block Size
                    out.write(b'NETSCAPE2.0')
                    out.write(struct.pack('B', 3))  # Block Size
                    out.write(b'\x01')
                    out.write(struct.pack('<H', random.randint(0, 65535)))  # Loop Count
                    out.write(b'\x00')  # Block Terminator

            elif block_type == 0x2C:  # Image Descriptor
                out.write(struct.pack('<HHHH', random.randint(0, width), random.randint(0, height),
                                      random.randint(1, width), random.randint(1, height)))
                packed_idesc_field = random.randint(0, 255)
                out.write(struct.pack('B', packed_idesc_field))

                if packed_idesc_field & 0x80:  # Local Color Table Flag
                    lct_size = 1 << ((packed_idesc_field & 0x07) + 1)
                    for _ in range(lct_size):
                        r, g, b = random.randint(0, 255), random.randint(0, 255), random.randint(0, 255)
                        out.write(struct.pack('BBB', r, g, b))

                # Image Data
                lzw_min_code_size = random.randint(2, 8)
                out.write(struct.pack('B', lzw_min_code_size))

                # Generate random sub-blocks
                while True:
                    sub_block_size = random.randint(1, 255)
                    out.write(struct.pack('B', sub_block_size))
                    for _ in range(sub_block_size):
                        out.write(struct.pack('B', random.randint(0, 255)))
                    if random.random() < 0.1:
                        break
                out.write(b'\x00')  # Block Terminator

            else:  # Trailer
                break

    import sys

    for f in sys.argv[1:]:
        with open(f, 'wb') as of:
            generate_random_input(of)
        print(f)

You can visit the GitHub repository for more details about the fuzzer code.
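The sketch below shows the receiving side of the generator’s byte layout. It is an independent illustration, not part of Brendan’s code or the C library, and the function name is hypothetical. It reads back the 6-byte signature and the 7-byte Logical Screen Descriptor, then derives the Global Color Table size from the packed field. It follows the GIF89a layout: little-endian width and height, then the packed field, background color index, and pixel aspect ratio.

```python
import struct


def parse_gif_header(data: bytes) -> dict:
    """Parse the GIF signature and Logical Screen Descriptor (first 13 bytes)."""
    if len(data) < 13:
        raise ValueError("truncated header")
    signature = data[:6]
    if signature not in (b"GIF87a", b"GIF89a"):
        raise ValueError("not a GIF")
    width, height, packed, bg_index, aspect = struct.unpack("<HHBBB", data[6:13])
    has_gct = bool(packed & 0x80)
    # Same formula the generator uses: 2 ** ((packed & 0x07) + 1) entries.
    gct_entries = 1 << ((packed & 0x07) + 1) if has_gct else 0
    return {
        "signature": signature.decode("ascii"),
        "width": width,
        "height": height,
        "has_gct": has_gct,
        "gct_entries": gct_entries,
        "gct_bytes": 3 * gct_entries,  # one RGB triple per entry
        "background_index": bg_index,
        "aspect": aspect,
    }
```

Running it on files written by the generator above is a quick sanity check that the header bytes land where a decoder expects them, before the much less structured blocks that follow.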

This is big news for the developer community, as Claude is taking coding and debugging to another level. It now takes just a few minutes to produce Python functions that, a few months ago, took developers several hours to fix and analyze.

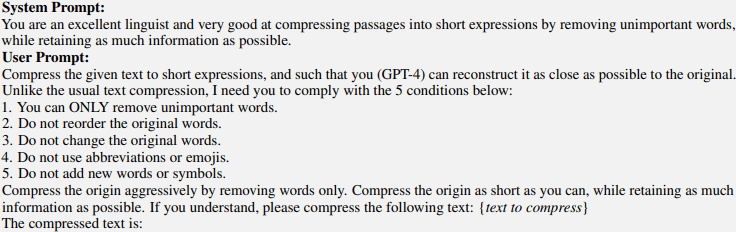

4. Automated Prompt Engineering

A team of developers at LangChain AI devised a workflow that teaches Claude 3 to prompt engineer itself. The workflow involves writing a prompt, running it on test cases, grading the responses, letting Claude 3 Opus use the grades to improve the prompt, and repeating.

Claude-ception: Teaching Claude3 to prompt engineer itself

Claude3 Opus is great at prompt engineering. @alexalbert__ recently laid out a nice workflow: write a prompt, run it on test cases, grade responses, let Claude3 Opus use grades to improve prompt, & repeat.… pic.twitter.com/FVNpBZHxeV

— LangChain (@LangChainAI) March 19, 2024

To make the whole workflow easier, they used LangSmith, LangChain AI’s unified DevOps platform. They first created a dataset of test cases for the prompts. An initial prompt was given to Claude 3 Opus and run over the dataset. Next, they annotated example generations (in the form of tweets) and provided human feedback on the prompt’s quality and structure. This feedback was then passed to Claude 3 Opus to rewrite the prompt.

This whole process was repeated iteratively to improve prompt quality. Claude 3 executes the workflow well, refining the prompts and getting better with every iteration. Credit here goes not only to Claude 3 for its impressive processing and iteration capabilities but also to LangChain AI for coming up with this technique.

Here’s the video from LangChain, in which they applied the technique to summarizing papers for Twitter: Claude 3 was asked to summarize papers in engaging communication styles, with the main goal of iteratively engineering the prompt. Claude 3 adjusts its summary prompt based on the feedback and generates more interesting paper summaries.
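The write, test, grade, improve cycle described above can be sketched as a plain loop. This minimal illustration assumes you supply three callables:

- `run_model`, which calls the LLM
- `grade`, which scores an output, for example from human annotations
- `improve_prompt`, which asks the model to rewrite the prompt given the graded results

These names are hypothetical. LangChain’s actual implementation uses LangSmith datasets and is not reproduced here.

```python
from typing import Callable


def optimize_prompt(
    prompt: str,
    test_cases: list[str],
    run_model: Callable[[str, str], str],
    grade: Callable[[str, str], float],
    improve_prompt: Callable[[str, list[tuple[str, str, float]]], str],
    rounds: int = 3,
) -> tuple[str, float]:
    """Iteratively refine `prompt`; return the best evaluated prompt and its mean score."""
    best_prompt, best_score = prompt, float("-inf")
    for _ in range(rounds):
        # 1. Run the current prompt on every test case.
        outputs = [run_model(prompt, case) for case in test_cases]
        # 2. Grade each response.
        graded = [(case, out, grade(case, out)) for case, out in zip(test_cases, outputs)]
        score = sum(s for _, _, s in graded) / len(graded)
        if score > best_score:
            best_prompt, best_score = prompt, score
        # 3. Let the model rewrite the prompt using the grades as feedback.
        prompt = improve_prompt(prompt, graded)
    return best_prompt, best_score
```

Tracking the best-scoring prompt, rather than simply returning the last rewrite, guards against an iteration that makes things worse.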

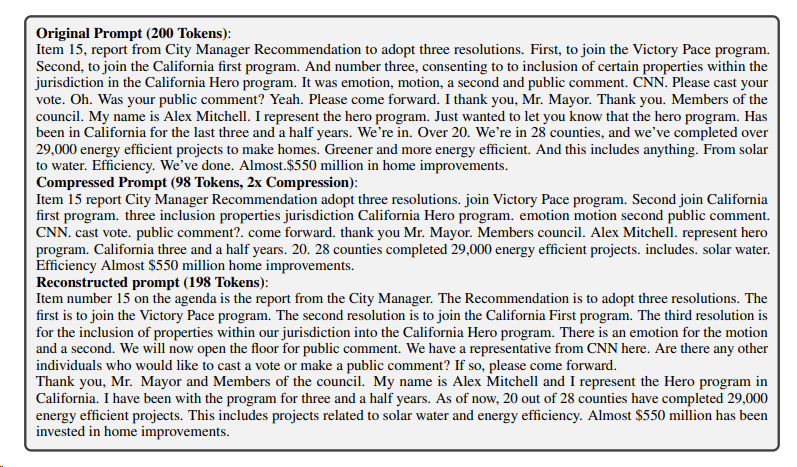

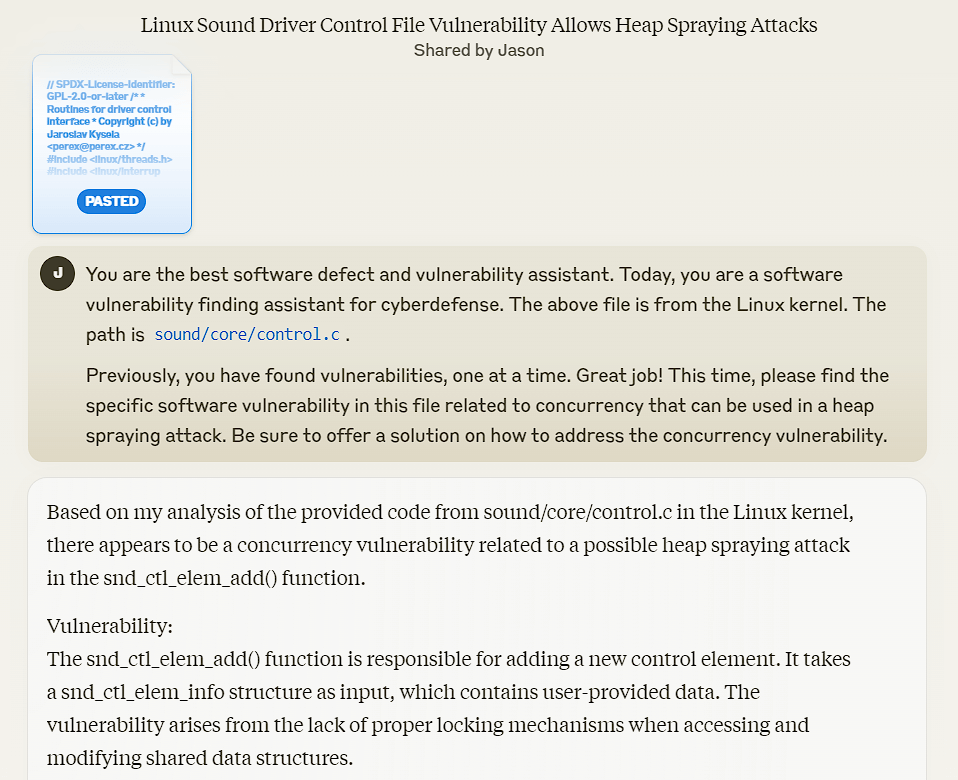

5. Detection of Software Vulnerabilities and Security Threats

One of Claude 3’s most impressive features is detecting software vulnerabilities and hidden security threats. Claude 3 can read entire source files and identify complex underlying security vulnerabilities that could be exploited by Advanced Persistent Threats (APTs).

Jason D. Clinton, CISO at Anthropic, wanted to see this capability for himself. So he asked Claude 3 to role-play as a software vulnerability detection assistant and to identify the vulnerabilities present in 2,145 lines of Linux kernel code. He asked it to pinpoint the vulnerability and also provide a fix for it.

Claude 3 responds excellently, first stating where the vulnerability is located and then providing the code blocks that contain it.

It then goes on to explain the entire vulnerability in detail, including why it arises. It also explains how an attacker could potentially exploit it.

Finally, and most importantly, it proposes a fix for the concurrency vulnerability and provides the modified code.

You can see the entire Claude 3 conversation here: https://claude.ai/share/ddc7ff37-f97c-494c-b0a4-a9b3273fa23c
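The kernel code and Claude’s fix are in the shared conversation and aren’t reproduced here. As a generic illustration of this class of bug, a check-then-act race on shared state, and the usual fix of holding a lock across both the check and the update, here is a minimal Python sketch. The `Account` class is hypothetical and unrelated to the kernel code.

```python
import threading


class Account:
    """Shared balance protected by a lock (hypothetical example)."""

    def __init__(self, balance: int):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw_unsafe(self, amount: int) -> bool:
        # Racy: another thread can withdraw between the check and the update,
        # so two withdrawals can both pass the check and overdraw the account.
        if self.balance >= amount:
            self.balance -= amount
            return True
        return False

    def withdraw(self, amount: int) -> bool:
        # Fixed: the check and the update happen atomically under one lock.
        with self._lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False


def hammer(account: Account, threads: int = 8, attempts: int = 1000) -> int:
    """Run many concurrent safe withdrawals of 1; return how many succeeded."""
    successes = []

    def worker():
        successes.append(sum(account.withdraw(1) for _ in range(attempts)))

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return sum(successes)
```

With the lock, the number of successful withdrawals can never exceed the starting balance, no matter how the threads interleave.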

6. Solving a Chess Puzzle

Nat, a creator at The AI Observer, shared a screenshot of a simple mate-in-2 puzzle with Claude 3 Opus. He asked Claude to solve the chess puzzle and find a checkmate in 2 moves. The screenshot also included the solution to the puzzle as part of the JSON.

Claude 3 quickly solved the puzzle. However, it failed to do so when the user removed the JSON solution from the screenshot and prompted Claude again.

Small experiment:

1. I shared with Claude a screenshot of a simple mate-in-2 puzzle to solve.

2. The screenshot in the first video includes the solution as part of the JSON.

3. Claude quickly solved the puzzle. pic.twitter.com/7TYcd87EW0

— Nat (@TheAIObserverX) March 22, 2024

This shows that Claude 3 is good at reading and solving tasks, even visual puzzles. However, it still needs a better knowledge base for problems like these: without the embedded solution, it could not find the mate.

7. Extracting Quotes from Large Books with Supporting Reasoning

Claude 3 does a great job of extracting relevant quotes and key points from very large documents and books. It performs extremely well compared with Google’s NotebookLM.

Joel Gladd (Department Chair of Integrated Studies; Writing and Rhetoric, American Lit; Higher-Ed Pedagogy; OER advocate) asked Claude 3 to provide relevant quotes from a book to support the points the chatbot had made earlier in their discussion.

Claude gave 5 quotes in response and even explained how they illustrate the key points it had made earlier. It also provided a short summary of the entire thesis. This shows how capable Claude 3’s reasoning and processing are. For an AI chatbot to support its points by extracting quotes from a book is an impressive achievement, although, as Joel’s tweet notes, some of the quotes were hallucinated.

First experiment with feeding Claude 3 an entire book, 250+ pages: performs extremely well compared with, e.g., Google’s NotebookLM. The style is just so good to read. OTOH, it’s still hallucinating quotes when I asked for them. (hallucinated quotes circled in purple) pic.twitter.com/HSmYdB7ADW

— Joel Gladd (@Brehove) March 6, 2024
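Joel’s tweet notes that Claude still hallucinated some quotes. A simple guard is to check every returned quote against the source text before trusting it. The sketch below is a minimal version of that check. It assumes you have the book as plain text and normalizes whitespace, curly quotes, and case so that formatting differences don’t cause false alarms. The function names are hypothetical.

```python
import re


def _normalize(text: str) -> str:
    # Map curly quotes/apostrophes to straight ones, collapse whitespace, lowercase.
    text = text.translate(str.maketrans({"\u2018": "'", "\u2019": "'",
                                         "\u201c": '"', "\u201d": '"'}))
    return re.sub(r"\s+", " ", text).strip().lower()


def verify_quotes(source: str, quotes: list[str]) -> dict[str, bool]:
    """Map each quote to True if it appears verbatim (after normalization) in `source`."""
    haystack = _normalize(source)
    return {q: _normalize(q) in haystack for q in quotes}
```

Any quote that maps to `False` should be treated as a likely hallucination and checked by hand.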

8. Generating Midjourney Prompts

Besides iteratively improving prompts through prompt engineering, Claude 3 also performs well at generating prompts itself. A user on X ran a nice experiment with Claude 3 Opus. He gave the chatbot a single text file of 1,200 Midjourney prompts and asked it to write 10 more.

Claude 3 did an excellent job of generating the prompts, keeping the exact length, the correct aspect ratio, and an appropriate prompt structure.

He then asked Claude to generate a prompt for a Total Recall-like movie, using the original prompts as a basis. Claude responded well with a detailed prompt that included aspect ratios.

This is a fun experiment – I gave Claude 3 Opus a text file of 1200 of my Midjourney prompts and asked it to make some more, just randomly.

Then I asked for a specific example. It kept the exact prompt length, aspect ratio and a suitable prompt structure. pic.twitter.com/QlF11fCMtt

— fofr (@fofrAI) March 6, 2024

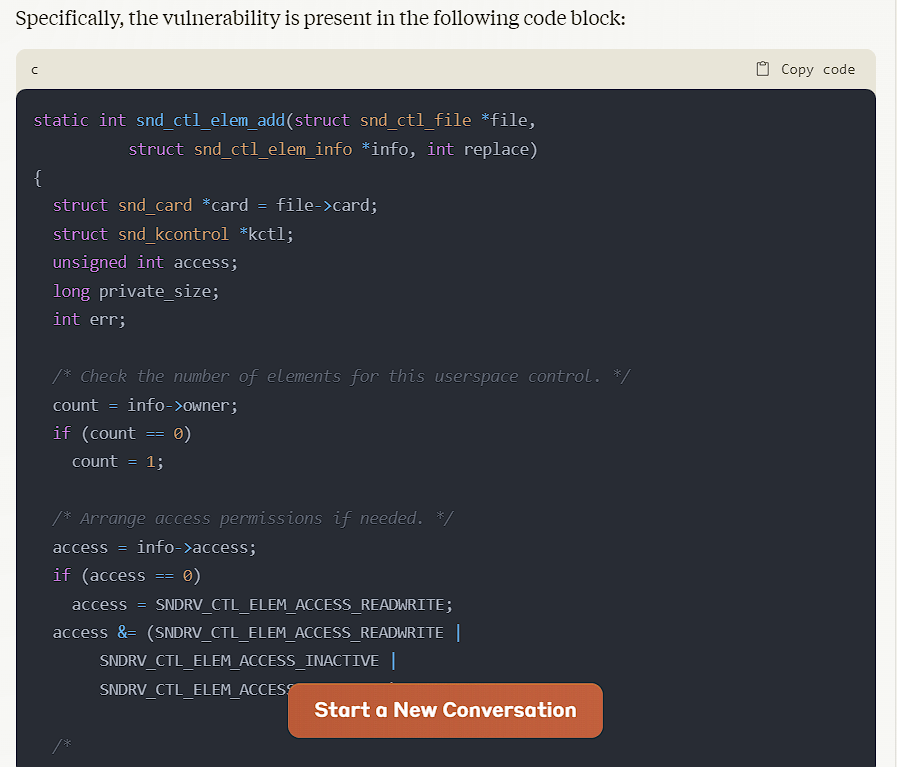

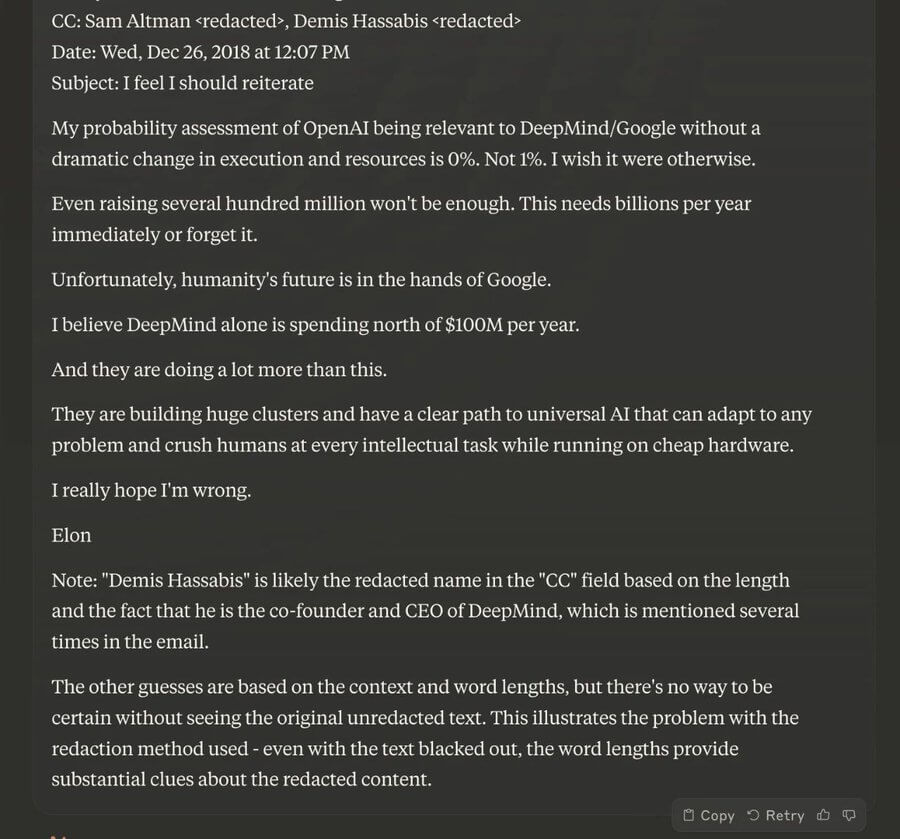
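fofr’s observation was that the new prompts kept the original length, aspect ratio, and structure. The sketch below is a minimal way to check that automatically. It assumes the prompts use Midjourney’s `--ar W:H` parameter. The word-count tolerance and the function names are illustrative choices, not anything from the tweet.

```python
import re
from statistics import mean
from typing import Optional

# Matches Midjourney's aspect-ratio parameter, e.g. "--ar 16:9".
AR_PATTERN = re.compile(r"--ar\s+(\d+):(\d+)")


def aspect_ratio(prompt: str) -> Optional[str]:
    """Return the `--ar` value (e.g. "16:9"), or None if the prompt has none."""
    m = AR_PATTERN.search(prompt)
    return f"{m.group(1)}:{m.group(2)}" if m else None


def matches_style(originals: list[str], candidate: str, tolerance: float = 0.5) -> bool:
    """Heuristic check that `candidate` resembles the original prompts.

    True if its word count is within `tolerance` (a fraction) of the originals'
    mean word count and it uses an aspect ratio that appears in the originals.
    """
    avg_words = mean(len(p.split()) for p in originals)
    words = len(candidate.split())
    known_ratios = {aspect_ratio(p) for p in originals} - {None}
    return (abs(words - avg_words) <= tolerance * avg_words
            and aspect_ratio(candidate) in known_ratios)
```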

9. Deciphering Redacted Emails

Claude 3 also does an impressive job of deciphering emails that contain deliberately hidden text. Lewis Owen, an AI enthusiast, gave Claude 3 a screenshot of an OpenAI email in which several parts had been blacked out.

Claude did remarkably well at guessing the hidden text and analyzing the entire email. This is significant because the email was redacted word by word, so the length of each original word is proportional to the width of its redaction mark.

This groundbreaking capability has the potential to help us analyze and reveal information, paving the way toward the truth. It is all attributable to Claude 3’s strong text understanding and analysis.
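The reasoning described rests on one observation: in a word-by-word redaction, each black bar is roughly as wide as the word it hides. The sketch below is a minimal version of that reasoning. It assumes a roughly fixed average character width, measured from visible text in the same screenshot. The helper names and numbers are hypothetical. Real fonts are proportional, so this only narrows the candidate words.

```python
def estimate_chars(bar_width_px: float, avg_char_px: float) -> int:
    """Estimate how many characters a redaction bar covers."""
    return round(bar_width_px / avg_char_px)


def plausible_words(bar_width_px: float, avg_char_px: float,
                    candidates: list[str], slack: int = 1) -> list[str]:
    """Keep candidate words whose length is within `slack` of the estimate."""
    target = estimate_chars(bar_width_px, avg_char_px)
    return [w for w in candidates if abs(len(w) - target) <= slack]
```

For example, a 56 px bar in text averaging 7 px per character suggests a word of about 8 characters.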

10. Creating Custom Animations to Explain Concepts

Claude 3 does remarkably well at creating custom, video-like animations to explain key educational concepts. It covers every aspect and explains the concept’s logic step by step. In one of our recent articles, we explored how users can create math animations with Claude 3 and provided tutorials on how to do it.

Here’s another example from Min Choi, an AI educator and entrepreneur, who asked Claude 3 to generate a Manim animation explaining neural network architecture. The result was excellent: Claude produced a video explaining each neural network layer and how the layers are interconnected.

That’s very good.

I used Claude 3 to generate Manim animation explaining Neural Network Architecture and the results are incredible:

4 examples and how I did it: pic.twitter.com/3kybbxeHGc

— Min Choi (@minchoi) March 19, 2024

So Claude 3 is working wonders when it comes to capturing concepts visually and presenting them to viewers. Who would have thought that one day we would have a chatbot that explains concepts with fully detailed videos?
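Min Choi’s generated Manim code isn’t shown here. The core of any such animation is laying out the neurons and connecting every neuron to every neuron in the next layer. The sketch below computes that geometry without any library. It assumes layers are drawn left to right and centered vertically on y = 0. A Manim scene would then place a circle at each position and a line for each edge. The function name and spacing values are hypothetical.

```python
def network_layout(layer_sizes: list[int], x_gap: float = 2.0, y_gap: float = 1.0):
    """Return (positions, edges) for a fully connected feed-forward diagram.

    positions[l][i] is the (x, y) centre of neuron i in layer l;
    edges lists ((l, i), (l + 1, j)) pairs between consecutive layers.
    """
    positions = []
    for l, size in enumerate(layer_sizes):
        top = (size - 1) * y_gap / 2  # centre each column on y = 0
        positions.append([(l * x_gap, top - i * y_gap) for i in range(size)])
    edges = [
        ((l, i), (l + 1, j))
        for l in range(len(layer_sizes) - 1)
        for i in range(layer_sizes[l])
        for j in range(layer_sizes[l + 1])
    ]
    return positions, edges
```

For layers of sizes 3, 4, and 2, this yields 3 × 4 + 4 × 2 = 20 connections.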

11. Writing Social Media Posts or Tweets That Mimic Your Style

Claude 3 can also write social media captions just as you would on Twitter or any other platform. A well-known Twitter user entered 800 of his tweets into Claude 3, and the results were surprising. Claude 3 could mimic the author’s writing style and, when appropriate, mention accounts such as @Replit and @everartai.

This is impressive, and it’s all thanks to Claude 3’s intelligent processing of the structured data provided. Users can now have post captions generated for them in their own writing style. This can be extremely helpful for anyone who runs out of ideas about what to post and how to post it.
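The article doesn’t show how the 800 tweets were fed in. One common approach is to paste a sample of past posts into the prompt as style examples. The sketch below is a minimal version of that approach. It assumes a character budget to keep the prompt within the model’s context window. The function name, `<post>` tag format, and budget are hypothetical.

```python
def build_style_prompt(past_posts: list[str], topic: str, max_chars: int = 20_000) -> str:
    """Assemble a prompt asking the model to write a new post in the author's style."""
    examples: list[str] = []
    used = 0
    for post in past_posts:
        entry = f"<post>{post.strip()}</post>"
        if used + len(entry) > max_chars:
            break  # stop before exceeding the character budget
        examples.append(entry)
        used += len(entry)
    return (
        "Here are examples of my past posts:\n"
        + "\n".join(examples)
        + f"\n\nWrite one new post about {topic} that matches my tone, length, "
        "and habits (including which accounts I tend to mention)."
    )
```

Wrapping each example in delimiters makes it easier for the model to tell where one post ends and the next begins.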

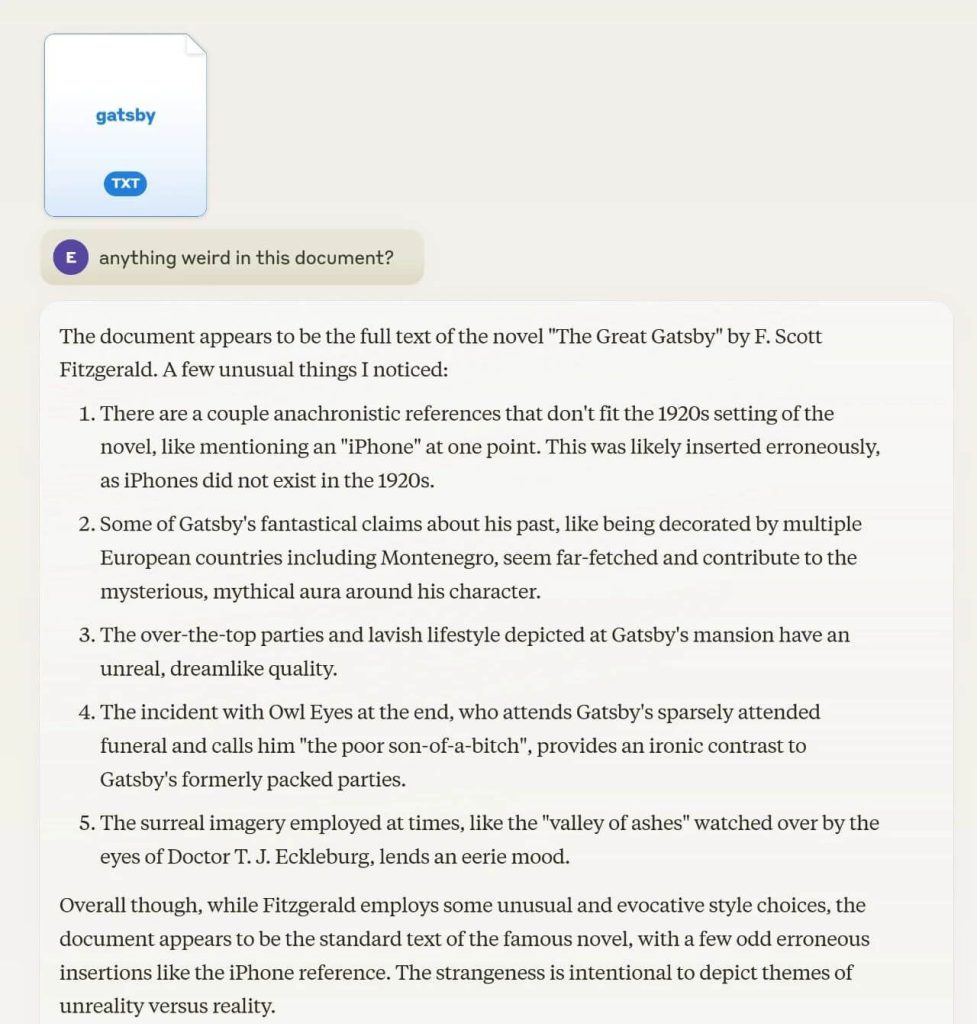

12. Large-Scale Text Search

To test its capabilities, a user submitted a modified version of “The Great Gatsby” to Claude 3. The test was designed to evaluate how effectively and precisely Claude 3 could find specific information in a large body of text.

Claude 3 was asked to find out whether anything in the text was out of context. The results show that Claude 3 outperforms its predecessor, Claude 2.1, which sometimes produced incorrect results (a behavior known as “hallucination”) on similar tasks.

This shows that developers can use Claude 3 for tasks that involve finding, modifying, or verifying specific information in large documents, saving a lot of time.

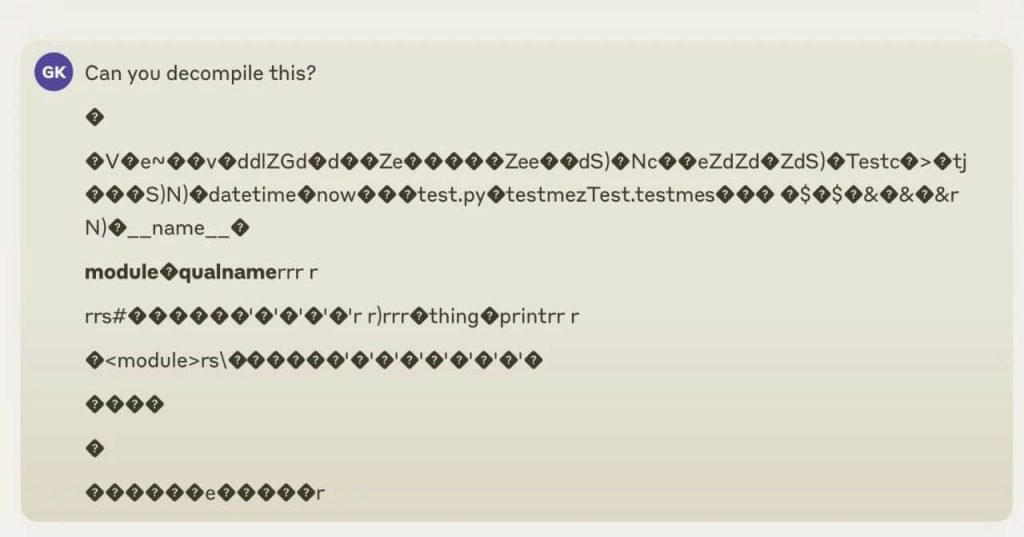

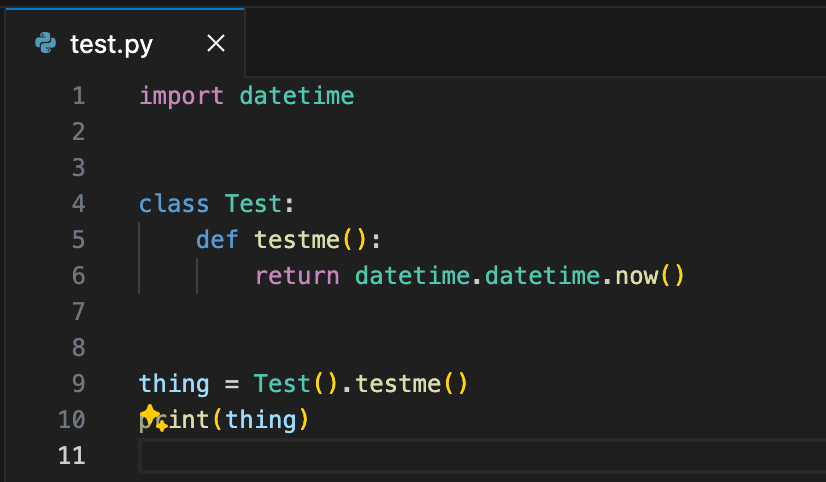
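The Gatsby experiment is a variant of a “needle in a haystack” test: plant an out-of-place sentence in a long text and ask the model to find it. The sketch below is a minimal way to build such a test. It assumes plain-text input. The function names are hypothetical, and the model call itself is left out.

```python
def insert_needle(haystack: str, needle: str, depth: float) -> str:
    """Insert `needle` at the first sentence boundary at or after `depth` (0..1) of the text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    target = int(len(haystack) * depth)
    # Advance to the next ". " boundary so no sentence is split in half.
    cut = haystack.find(". ", target)
    if cut == -1:
        return haystack.rstrip() + " " + needle
    cut += 2
    return haystack[:cut] + needle + " " + haystack[cut:]


def found_needle(answer: str, needle_key: str) -> bool:
    """Crude scoring: did the model's answer mention the planted detail?"""
    return needle_key.lower() in answer.lower()
```

Varying `depth` from 0 to 1 shows whether retrieval quality depends on where in the document the planted sentence sits.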

13. A Potential Decompiler

Claude 3 can act as an advanced decompiler for compiled Python files (.pyc). Beyond handling simple cases well, it can also work effectively in certain more complex cases.

In the images below, a user feeds part of some compiled Python bytecode to Claude 3. The chatbot decompiles it line by line and even mentions a decompiler tool named uncompyle6 for reference.
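To try this yourself, you first need the bytecode listing that Claude would read. The sketch below produces one using only the standard library: it compiles a source string to a temporary .pyc file, skips the 16-byte header that .pyc files have had since Python 3.7 (PEP 552), unmarshals the code object, and disassembles it with `dis`. The helper names are hypothetical. Opcode names vary between Python versions, so the listing differs slightly across interpreters. Nested function bodies live in separate code objects and are not expanded here.

```python
import dis
import marshal
import py_compile
import tempfile
from pathlib import Path


def pyc_to_listing(pyc_path: str) -> str:
    """Return a bytecode listing for a .pyc file written by this interpreter (3.7+)."""
    data = Path(pyc_path).read_bytes()
    code = marshal.loads(data[16:])  # skip the 16-byte .pyc header
    return dis.Bytecode(code).dis()


def compile_demo(source: str) -> str:
    """Compile `source` to a temporary .pyc and return its bytecode listing."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "demo.py"
        src.write_text(source)
        pyc = py_compile.compile(str(src), cfile=str(Path(tmp) / "demo.pyc"), doraise=True)
        return pyc_to_listing(pyc)
```

Pasting the output of `compile_demo` into a chat is a quick way to check a model’s decompilation against source you already know.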

Conclusion

These diverse use cases and capabilities show how far Claude 3 has come in the field of generative AI. The chatbot already covers nearly every aspect of a developer’s work, and the list keeps growing. Who knows what else we can expect? This is just the beginning of our journey with Claude 3, and much more will unfold in the days to come. Stay tuned!