An AI That Can Clone Your Voice

On March 29, 2024, OpenAI leveled up its generative AI game when it unveiled its brand-new voice cloning tool, Voice Engine. The tool brings cutting-edge technology that can clone your voice from just 15 seconds of audio.

Highlights:

- OpenAI unveils Voice Engine, an AI that can clone any person’s voice.

- Comes with a range of features such as translation and reading assistance.

- Currently in preview mode and rolled out only to a few companies, keeping safety guidelines in mind.

We’re sharing our learnings from a small-scale preview of Voice Engine, a model which uses text input and a single 15-second audio sample to generate natural-sounding speech that closely resembles the original speaker. https://t.co/yLsfGaVtrZ

— OpenAI (@OpenAI) March 29, 2024

OpenAI has been on the move in bringing a revolution to the generative AI industry. After Sora, its state-of-the-art video generation model, this is yet another major development from OpenAI, one that may shake up the world of AI enthusiasts and developers.

What is OpenAI’s Voice Engine, and how can developers make the most of this tool? What features come with it? Let’s explore them in depth!

What’s Voice Engine from OpenAI?

The well-known artificial intelligence company OpenAI has entered the voice assistant market with Voice Engine, its most recent invention. With just 15 seconds of recorded speech from the subject, this state-of-the-art technology can accurately mimic a person’s voice.

The development of Voice Engine began in late 2022, and OpenAI has used it to power ChatGPT Voice and Read Aloud, as well as the preset voices available in the text-to-speech API.

All that Voice Engine needs is a short recording of your speaking voice and some text to read, and it can then generate a replica of your voice. The voices are surprisingly realistic and high in quality, and they convey emotion to a remarkable degree.

This highly advanced technology from OpenAI also appears aimed at combating deepfakes and illegal voice generation worldwide, which have been a major problem to date. Give the tool a 15-second audio sample, and it will generate highly natural-sounding speech in your own voice.

How was Voice Engine trained?

A mix of licensed and publicly available data sets was used to train OpenAI’s Voice Engine model. Models such as the one that powers Voice Engine learn from examples, in this case speech recordings, and are typically trained on a vast number of data sets and publicly accessible websites.

Jeff Harris, a member of the product staff at OpenAI, told TechCrunch in an interview that Voice Engine’s generative AI model has been operating behind the scenes for some time. Since training data and related information are valuable assets for many generative AI vendors, they tend to keep them confidential.

However, another reason not to provide many details about training data is that it could become the subject of IP-related disputes. This is likely one of the main reasons that so little training information has been provided about Voice Engine’s AI model. Still, we can hope for a detailed technical report from OpenAI before long, giving deeper insight into the model’s build, dataset, and architecture.

What’s interesting is that Voice Engine hasn’t been trained or fine-tuned on user data. That’s partly due to the ephemeral way the model, which combines a transformer and a diffusion process, generates speech. By simultaneously analyzing the text meant to be read aloud and the speech sample it draws from, the model creates a matching voice without needing to build a custom model for each speaker.

We take a small audio sample and text and generate realistic speech that matches the original speaker. The audio that’s used is dropped after the request is complete.

Harris told TechCrunch in the interview about Voice Engine.

Looking Into Voice Engine’s Features

OpenAI’s Voice Engine comes with a range of features that are primarily built around cloning realistic human voices. Let’s look into these features in detail:

1. Assisting With Reading

Voice Engine’s audio cloning capabilities can be extremely helpful to children and students, because it uses realistic, expressive voices that convey a wider range of speech than is possible with preset voices. The tool has strong potential to provide realistic, interactive learning and reading sessions, which could greatly improve the quality of education.

A company named Age of Learning has been using GPT-4 and Voice Engine to enhance the reading and learning experience for a much wider audience.

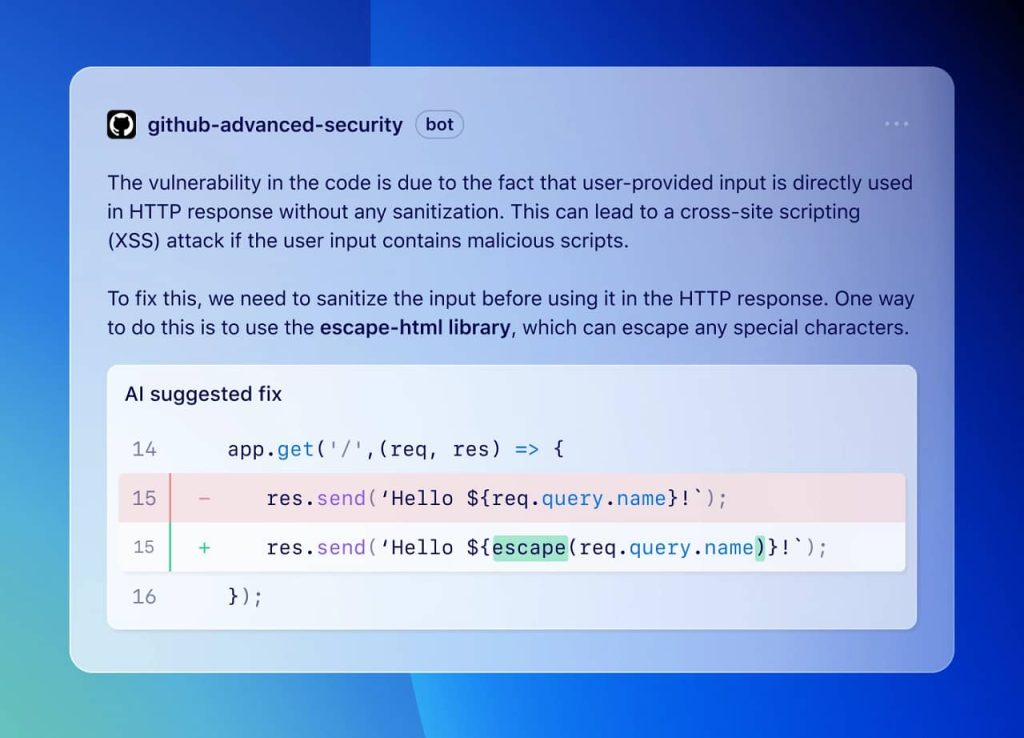

In the tweet below, you can see how the reference audio is cloned by Voice Engine to teach various subjects such as Biology, Reading, Chemistry, Math, and Physics.

OpenAI has introduced its voice cloning tool, Voice Engine.

From a short 15-second audio clip, it can realistically copy human voices and convert written text into speech. pic.twitter.com/6yNhhEGvxe

— BPT (@bpthaber) March 30, 2024

2. Translating Audio

Voice Engine can take a person’s voice input and translate it into a number of languages, allowing the content to reach a larger number of audiences and communities.

Voice Engine preserves the original speaker’s native accent when translating; for example, if English is generated using an audio sample from a Spanish speaker, the result will be Spanish-accented speech.

HeyGen, an AI visual storytelling company, is currently using OpenAI’s Voice Engine to translate audio inputs into multiple languages for a variety of content and demos.

In the tweet below, you can see how the input reference voice in English is translated into Spanish, Mandarin, and many more languages.

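OpenAI announces its speech generation model: Voice Engine

From text input and a single 15-second audio sample, it can generate natural-sounding speech that closely resembles the original speaker’s voice.

Voice Engine was first developed in late 2022 and has already been made available to a small number of companies, including HeyGen, for trial use.

Key features

1. Natural-sounding speech generation: using a single 15-second audio sample, Voice… pic.twitter.com/AjP2wAYr4N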

— 小互 (@imxiaohu) March 30, 2024

3. Connecting with Communities All Over the World

With Voice Engine and GPT-4, it is possible to give interactive feedback in each worker’s native language, such as Swahili, or in more informal languages like Sheng, a code-mixed language that is also used in Kenya. This could be an extremely useful feature for improving service delivery in remote settings.

Voice Engine is making it possible to improve the quality of life and of services in remote areas, which for a long time have not had access to the latest generative AI models and their technologies.

4. Helping Non-Verbal People

People who are non-verbal can make great use of Voice Engine to address their day-to-day challenges. Livox, an AI alternative communication app, powers AAC (Augmentative & Alternative Communication) devices, which facilitate communication for people with disabilities. By using Voice Engine, it can offer non-verbal people distinct, human-sounding voices in multiple languages.

Users who speak more than one language can choose the speech that best represents them, and they can keep their voice consistent across all spoken languages.

Voice Engine

OpenAI’s revolution in smart voice technology. OpenAI has announced the launch of a new voice model called “Voice Engine”, which can generate natural voices resembling a person’s voice from just 15 seconds of an audio sample. This model has already been used by major partners such as HeyGen.

▪️Key points about Voice… pic.twitter.com/TxrVPQPYw4

— سعيد الكلباني (@smalkalbani) March 29, 2024

5. Helping Patients Regain Their Voice

Voice Engine can be very helpful for people who suffer from sudden or degenerative speech conditions. The AI model is being offered as part of a pilot program by the Norman Prince Neurosciences Institute at Lifespan, a not-for-profit health institution that serves as the primary teaching affiliate of Brown University’s medical school and treats patients with neurologic or oncologic etiologies for speech impairment.

Because Voice Engine requires only a brief audio sample, doctors Fatima Mirza, Rohaid Ali, and Konstantina Svokos were able to restore the voice of a young patient who had lost her fluent speech owing to a vascular brain tumor, using audio from a video recorded for a school project.

Overall, Voice Engine’s cloning capabilities extend far beyond simple audio generation, covering a wide range of use cases that benefit young people, diverse communities, and non-verbal patients with speech conditions. OpenAI has made quite a bold move in developing a tool that could be of great use to people worldwide, with its magical “voice” features.

Is Voice Engine Available?

OpenAI’s announcement of Voice Engine, which hints at its intention to advance voice-related technology, follows the filing of a trademark application for the name. Despite the technology’s potentially revolutionary impact, the company has chosen to restrict Voice Engine’s availability to a small number of early testers for the time being, citing worries over potential misuse and the accompanying risks.

In line with our approach to AI safety and our voluntary commitments, we are choosing to preview but not widely release this technology at this time. We hope this preview of Voice Engine both underscores its potential and also motivates the need to bolster societal resilience against the challenges brought by ever more convincing generative models.

OpenAI explained its decision to limit the use of Voice Engine in its latest blog post.

Only a small group of companies has had access to Voice Engine, and they are using it to help a variety of groups of people, some of which we have already discussed in detail. Still, we can expect the tool to be rolled out publicly in the months to come.

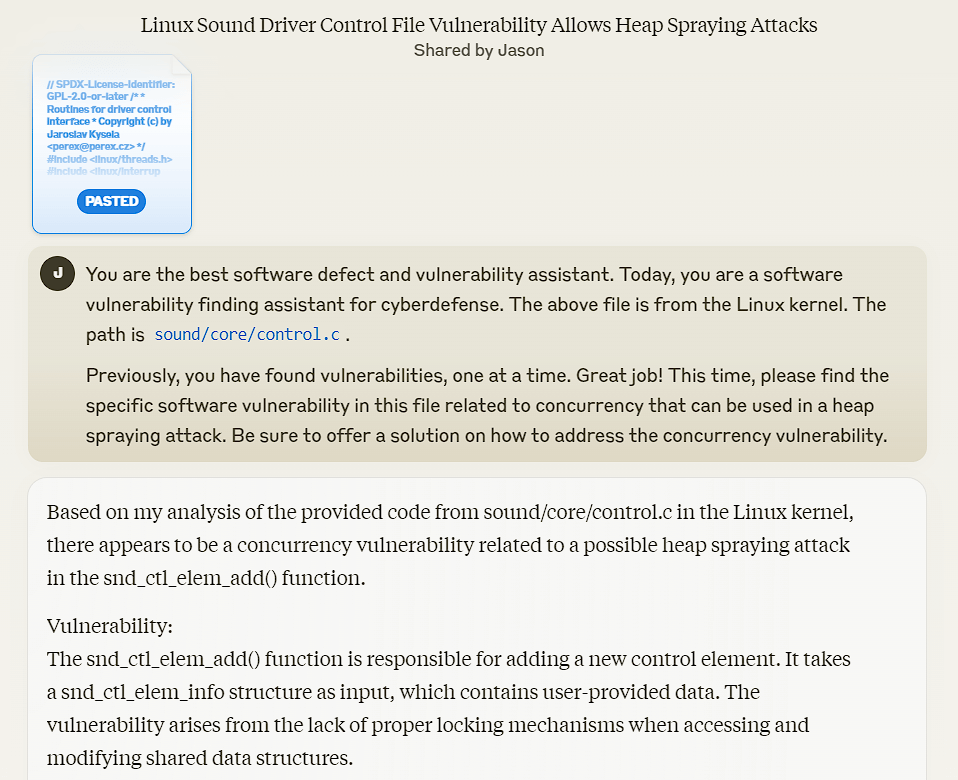

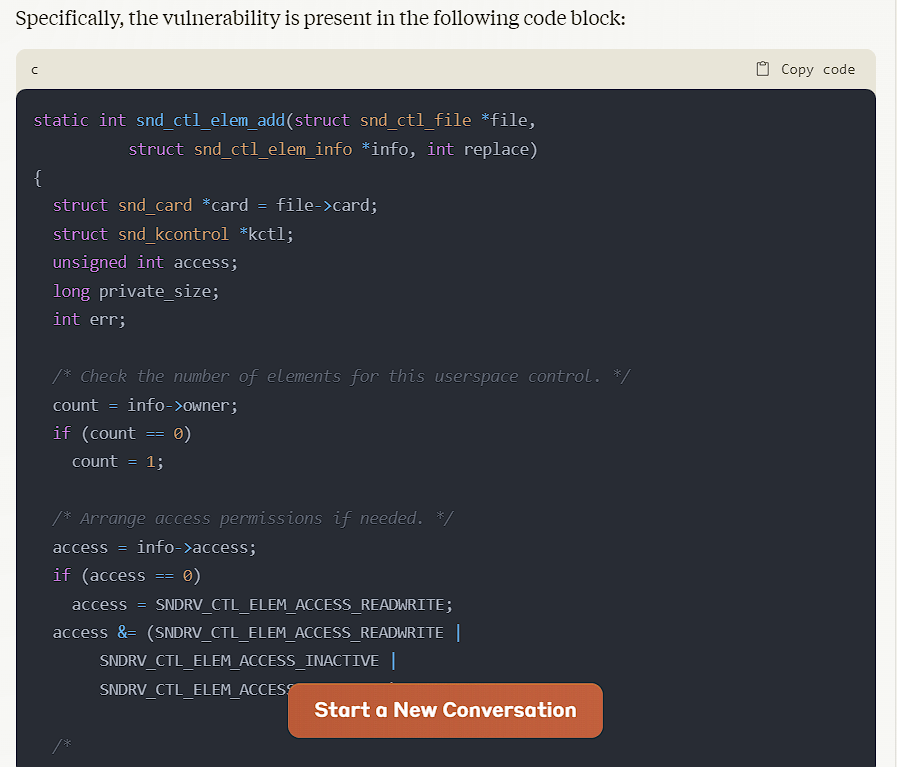

How is OpenAI tackling the misuse of “Deepfakes” with Voice Engine?

Recognizing the serious risks associated with voice mimicking, particularly on sensitive occasions like elections, OpenAI highlights the necessity of using this technology responsibly. The need for vigilance is critical, as seen in recent incidents like robocalls that mimic political figures with AI-generated voices.

Given the serious consequences of generating speech that sounds a lot like real people, particularly during election season, the company revealed how it is taking preventative measures to mitigate these dangers.

We recognize that generating speech that resembles people’s voices has serious risks, which are especially top of mind in an election year. We are engaging with U.S. and international partners from across government, media, entertainment, education, civil society, and beyond to ensure we are incorporating their feedback as we build.

OpenAI

The company also announced a set of safety measures, such as using a watermark to trace the origin of any audio generated by Voice Engine and monitoring how the audio is being used. The companies currently using Voice Engine are also required to adhere to OpenAI’s policies and community guidelines, which include obtaining consent from the person whose audio is being used and informing the audience that Voice Engine’s audio is AI-generated.

Conclusion

Voice Engine from OpenAI holds profound potential to change the landscape of audio generation forever. The creation and application of technologies like Voice Engine, which present both unprecedented potential and new difficulties, are expected to influence the direction of human-computer interaction as OpenAI continues to advance in the field of artificial intelligence. Only time will tell how the tool will be received by the public worldwide.